Did you know that 79% of organizations are already leveraging Generative AI technologies? Much like the internet defined the 1990s and the cloud revolutionized the 2010s, we are now in the era of Large Language Models (LLMs) and Generative AI.

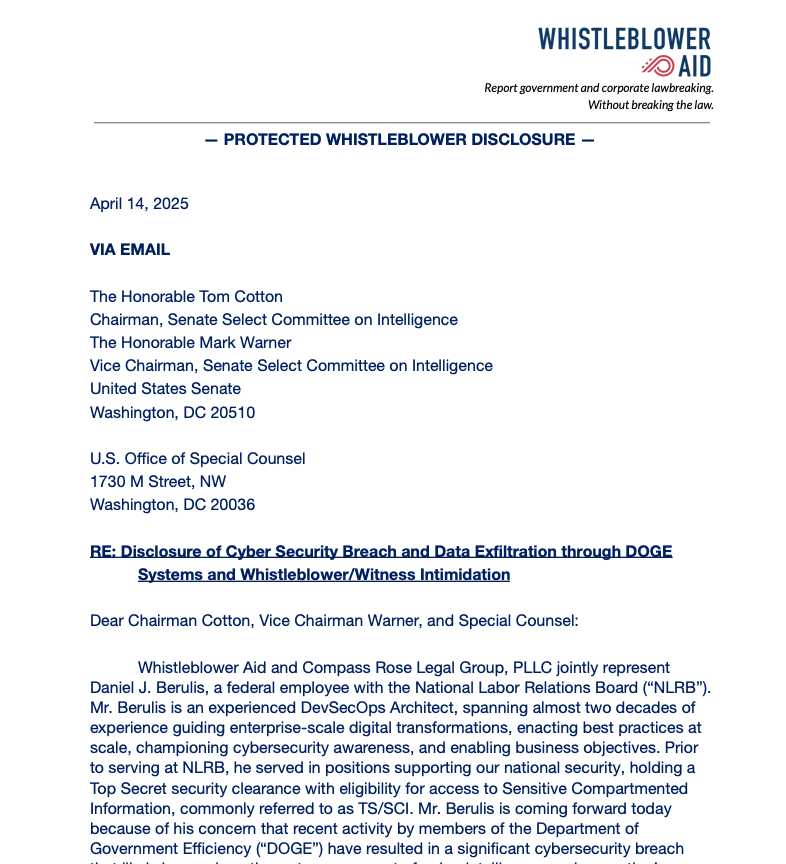

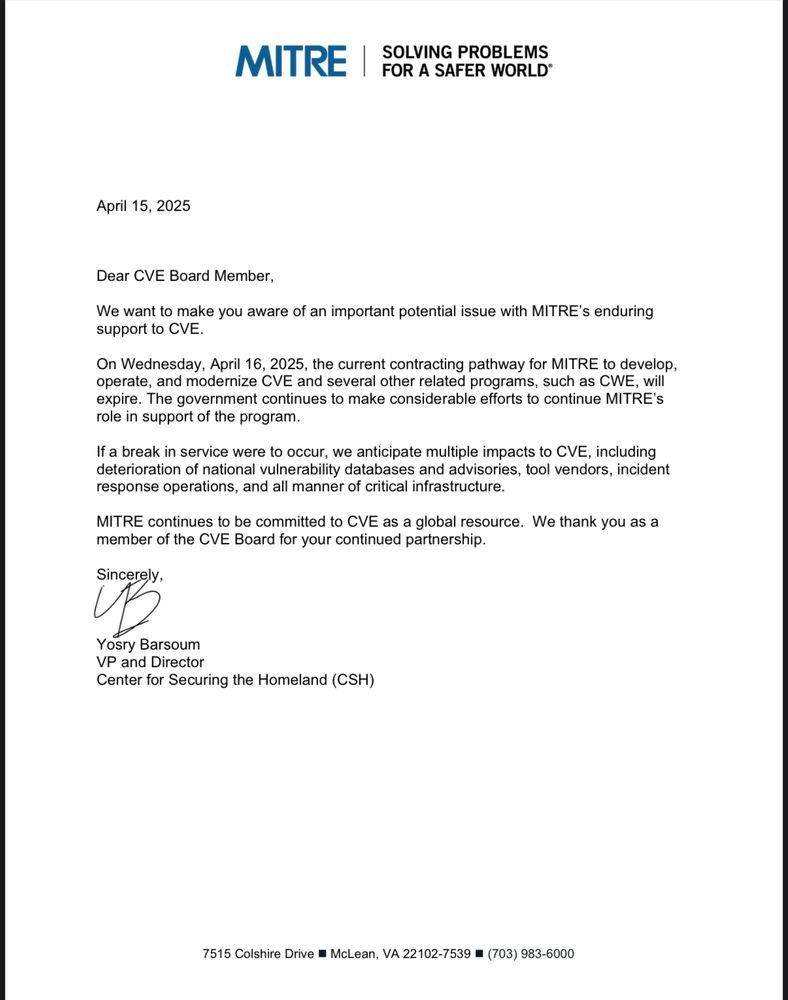

The potential of Generative AI is immense, but it also brings significant challenges, especially when it comes to integrating it securely. For all their powerful capabilities, LLMs must be adopted with caution. A breach in an LLM's security could expose the data it was trained on, along with sensitive organizational and user information, presenting a considerable risk.

Join us for an enlightening session with Elad Schulman, CEO & Co-Founder of Lasso Security, and Nir Chervoni, Booking.com’s Head of Data Security. They will share their real-world experiences and insights into securing Generative AI technologies.

Why Attend?

This webinar is a must for IT professionals, security experts, business leaders, and anyone fascinated by the future of Generative AI and security. It’s your comprehensive guide to the complexities of securing innovation in the age of generative artificial intelligence.

What You’ll Learn:

- How GenAI is Reshaping Business Operations: Explore the current state of GenAI and LLM adoption through statistics and insightful business case studies.

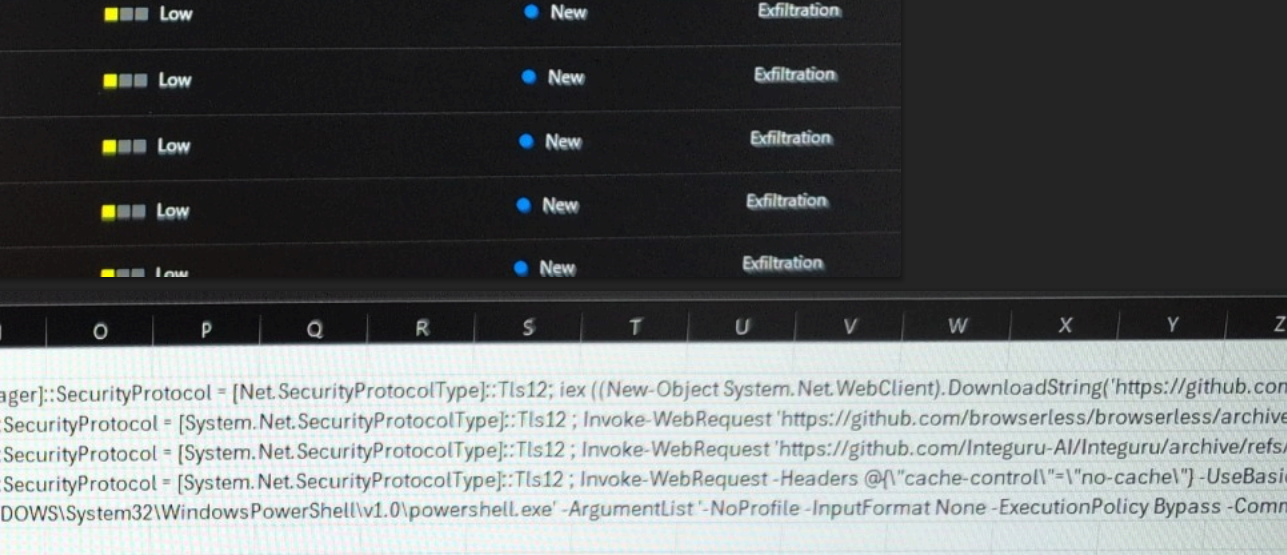

- Understanding Security Risks: Dive into the emerging security threats posed by Generative AI.

- Effective Security Strategies for Businesses: Gain insights into proven strategies to navigate GenAI security challenges.

- Best Practices and Tools: Discover best practices and tools for effectively securing GenAI applications and models.

Register Now for Expert-Led Insights

Don’t miss this opportunity to dive deep into the transformative potential of Generative AI and understand how to navigate its security implications with industry experts. Unlock the strategies to harness GenAI for your business securely and effectively.